What AI Detector Do College Admissions Use to Spot AI-Generated Essays

Curious about the AI detector tools colleges use? See how college admissions offices spot AI-generated essays in seconds.

Methods Admissions Offices Use to Spot AI-Generated Essays

College officials apply varied strategies in their quest to spot AI-generated essays efficiently. Each selection committee typically blends manual review with automated checks. Reviewers evaluate writing style, coherence, & creative depth first. Then they upload documents to an AI detector platform to analyze linguistic footprints. This two-tiered approach ensures solid judgment. Committees also compare the submitted work to past writing samples or standardized test prompts when available. That practice highlights abrupt shifts in tone or vocabulary that often arise when software composes text.

Admissions officers have grown aware that some students may attempt to evade scrutiny by editing algorithmic output. To counter that, some applications now include supplemental short-answer questions under tight time constraints. The limited duration reduces the chance for applicants to generate responses externally. As a result, admissions panels can measure authentic writing ability directly. Cross-referencing timed responses against essays processed through automatic detection tools makes contradictions much easier to spot.

Core Algorithm Techniques

- Statistical analysis of word frequency deviations

- Detection of unnatural punctuation patterns

- Evaluation of sentence complexity metrics

- Semantic coherence scoring
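
The first technique in the list above, word frequency deviation, can be illustrated with a minimal sketch. It assumes a reference distribution of word shares built from known human writing; the function names `relative_frequencies` and `frequency_deviation` and the tiny reference sample are hypothetical and do not describe any specific detector.

```python
import re
from collections import Counter


def relative_frequencies(text: str) -> dict[str, float]:
    """Map each lowercase word to its share of all words in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    total = len(words)
    if total == 0:
        return {}
    return {word: count / total for word, count in Counter(words).items()}


def frequency_deviation(essay: str, reference: dict[str, float]) -> float:
    """Sum of absolute differences between essay and reference word shares.

    0.0 means the two distributions match; values near 2.0 mean the essay
    shares almost no vocabulary with the reference.
    """
    essay_freq = relative_frequencies(essay)
    vocabulary = set(essay_freq) | set(reference)
    return sum(
        abs(essay_freq.get(word, 0.0) - reference.get(word, 0.0))
        for word in vocabulary
    )


# Hypothetical reference built from a toy sample, for illustration only.
reference = relative_frequencies("the cat sat on the mat")
print(frequency_deviation("the cat sat on the mat", reference))
print(frequency_deviation("dogs bark loudly", reference))
```

A real system would need a far larger reference corpus, and a high deviation alone says nothing about authorship; it is only one signal among several.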

Criteria Monitored in AI-generated Essays

Committees prioritize several key markers when they examine essays for signs of automation. They track coherence, looking for unnatural leaps between ideas that human writers typically avoid. They check vocabulary for improbable synonyms or overly technical phrases that break narrative flow. Grammar can also hint at algorithmic origin: AI often produces near-perfect syntax alongside inconsistent article usage or ambiguous pronoun references.

Another critical factor involves concept repetition. Automated systems tend to reuse specific terms excessively when generating content. That repetition stands out against the varied lexicon students normally employ. Panels also measure emotional resonance. Essays that lack genuine feeling, with no personal anecdotes or authentic reflection, trigger deeper checks. With these indicators combined, the system highlights potentially synthetic submissions for closer human scrutiny.

| Monitored Criterion | Description |

|---|---|

| Lexical Variety | Measures diversity of word choices across the essay |

| Concept Coherence | Assesses logical flow between paragraphs |

| Emotional Depth | Evaluates presence of personal voice & anecdotes |

| Punctuation Patterns | Detects unnatural arrangements of commas & semicolons |
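
Two of the criteria in the table, lexical variety and punctuation patterns, lend themselves to simple measurements. The sketch below is one possible illustration, assuming a type-token ratio as the variety measure and commas plus semicolons per word as the punctuation measure; both function names are hypothetical.

```python
import re


def type_token_ratio(text: str) -> float:
    """Distinct words divided by total words (higher means more variety)."""
    words = re.findall(r"[a-z']+", text.lower())
    return len(set(words)) / len(words) if words else 0.0


def punctuation_rate(text: str, marks: str = ",;") -> float:
    """Number of the given punctuation marks per word."""
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        return 0.0
    return sum(text.count(mark) for mark in marks) / len(words)


sample = "I ran, I fell; I ran again."
# 7 words, 4 of them distinct (i, ran, fell, again)
print(type_token_ratio(sample))
# 1 comma + 1 semicolon over 7 words
print(punctuation_rate(sample))
```

Type-token ratio falls as texts get longer, so any comparison would need essays of similar length.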

Role of Machine Learning Models in Detection

Colleges rely heavily on machine learning to flag suspicious submissions. These systems train on datasets containing genuine student writing & essays generated by common AI services. As a result, classifiers learn subtle differences in style, pacing, & argument structure. They assign a probability score to each essay, indicating how likely it originated from an algorithm rather than a human mind.
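
To make the idea of a probability score concrete, here is a minimal sketch of a logistic scoring function. The feature names, weights, and bias are hypothetical placeholders; a real classifier would learn its parameters from labeled human and AI essays and would likely use far richer inputs.

```python
import math

# Hypothetical parameters, chosen only for illustration.
WEIGHTS = {
    "type_token_ratio": -3.0,       # more varied vocabulary lowers the score
    "sentence_length_stdev": -0.2,  # more varied pacing lowers the score
    "repetition_rate": 4.0,         # heavy term reuse raises the score
}
BIAS = 1.0


def ai_probability(features: dict[str, float]) -> float:
    """Squash a weighted sum of features into a score between 0 and 1."""
    z = BIAS + sum(
        weight * features.get(name, 0.0) for name, weight in WEIGHTS.items()
    )
    return 1.0 / (1.0 + math.exp(-z))


varied = {"type_token_ratio": 0.7, "sentence_length_stdev": 8.0, "repetition_rate": 0.05}
uniform = {"type_token_ratio": 0.4, "sentence_length_stdev": 2.0, "repetition_rate": 0.30}
print(ai_probability(varied))
print(ai_probability(uniform))
```

With these made-up weights the second essay scores higher than the first, which mirrors how a score ranks submissions for human attention rather than proving authorship.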

Detection engines continuously update their knowledge base by ingesting new writing patterns from the latest AI models. This practice helps them adapt as language generators evolve. Admissions officers then view highlighted text segments that triggered high-risk scores. They assess sample passages manually & decide whether to request further verification or schedule an interview to confirm the applicant’s authentic voice.

Popular ML Architectures

- Transformer-based classifiers trained on large text corpora

- BERT derivatives tuned for academic writing analysis

- Recurrent neural networks focusing on syntactic trends

- Ensemble models combining multiple algorithm outputs

Integration of Detection Tools in Admission Workflow

Admissions teams weave detection solutions into each review stage. First, applicants submit essays through the portal. The platform auto-runs the text against an AI detector engine instantly. If the score crosses a set threshold, the system flags it for committee review. Next, human evaluators assess flagged samples without referencing score details to maintain impartiality. Those reviewers look for inconsistencies between the applicant’s voice in essays & previously submitted short responses.

During committee meetings, flagged cases receive special attention. Panels discuss whether the candidate demonstrated critical thinking & reflection beyond algorithmic potential. If doubts persist, officers may request a live writing prompt or interview with writing exercises. This structured integration safeguards fairness while enabling rapid high-volume screening of applications.

| Workflow Stage | Detection Action |

|---|---|

| Submission | Automated AI screening |

| Initial Review | Human assessment comparing tone & style |

| Committee Discussion | Flagged essay deliberation |

| Verification | Live writing evaluation or applicant interview |
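
The first two workflow stages can be sketched in a few lines. This is a simplified illustration, assuming a detector score between 0 and 1; the threshold value, the `Submission` class, and the function names are hypothetical and not taken from any admissions platform.

```python
from dataclasses import dataclass

FLAG_THRESHOLD = 0.8  # hypothetical cut-off; each institution would set its own


@dataclass
class Submission:
    applicant_id: str
    essay: str
    ai_score: float  # detector output in the range 0 to 1


def route(submission: Submission, threshold: float = FLAG_THRESHOLD) -> str:
    """Send essays at or above the threshold to committee review."""
    if submission.ai_score >= threshold:
        return "committee_review"
    return "standard_review"


def blind_view(submission: Submission) -> dict[str, str]:
    """What the human evaluator sees: the score is deliberately left out."""
    return {"applicant_id": submission.applicant_id, "essay": submission.essay}


flagged = Submission("a-001", "My summer at the lab...", ai_score=0.91)
print(route(flagged))
print(blind_view(flagged))
```

Withholding the score in `blind_view` reflects the impartiality step described above, where evaluators read flagged samples without seeing score details.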

Educating Reviewers to Identify AI-generated Essays

Universities organize workshops for staff & faculty to sharpen their detecting skills. During these sessions, experienced instructors demonstrate how to catch unnatural variations in linguistic rhythm or sudden topic shifts. Participants examine side-by-side examples of student writing versus AI text. They discuss pattern recognition techniques & the importance of cross-validating against other submitted materials.

Training also covers recent advances in generative models, helping reviewers anticipate new evasion tactics. Instructors guide attendees through live tool demonstrations, showing how to interpret risk scores effectively. By equipping committees with both technical & contextual knowledge, universities foster a culture of vigilance & fairness in the admission process.

Training Methods

- Hands-on detection tool simulations

- Comparative analysis of genuine & AI texts

- Guided discussions on ethical considerations

- Periodic refresher courses aligned with AI updates

Challenges Encountered in Spotting AI-Generated Essays

Detecting algorithmic writing presents several hurdles. High-quality generators continually improve their mimicry of human style, making identification harder. Some systems refine outputs by imitating student idiosyncrasies, such as inconsistent grammar or casual tone. That capability complicates traditional signature-based tracking.

Admissions teams also face false positives. Certain applicants naturally adopt formal or technical vocabulary that resembles AI output. Over-reliance on risk scores can unfairly condemn genuine essays. Committees must calibrate thresholds carefully to avoid rejecting capable students. On top of that, maintaining reviewer morale during lengthy deliberations on marginal cases remains an ongoing concern.

| Detection Challenge | Impact on Admission |

|---|---|

| High-Fidelity Generators | Reduced distinction between AI & human text |

| False Positives | Potential unjust application rejections |

| Rapid AI Evolution | Constant need for tool updates |

| Reviewer Fatigue | Increased error rate in manual checks |

“Human judgment remains critical even as tools advance. Careful evaluation prevents misclassifying genuine student work.” – Forest Upton

Strategies for Mitigating Detection Risks

Advisors coach applicants to emphasize personal narrative & specific details. Encouraging students to integrate real-life anecdotes & precise examples reduces algorithmic mimicry. Admissions panels recommend drafting essays on paper first, then typing them to maintain organic flow. That habit introduces natural variations unlikely to align perfectly with automation patterns.

Another tactic involves asking applicants to discuss recent experiences, current events, or course-specific topics. Those prompts demand up-to-date knowledge & personal insights. AI tools may struggle to incorporate recent developments or subjective interpretations effectively. By tailoring questions to individual contexts, reviewers increase the likelihood of detecting inauthentic content.

Recommended Practices

- Use personalized prompts focused on applicant experiences

- Limit essay word counts to manageable lengths for manual review

- Incorporate timed in-person writing elements

- Request sample drafts or outlines to compare writing evolution

Future Developments in AI Detector Technology

Research teams plan to integrate behavioral biometrics into writing sessions. By tracking keystroke dynamics, they can check whether the same individual authored short responses & longer essays. That addition could make authorship checks considerably more accurate. Combined with refined language models trained on domain-specific academic texts, next-generation tools aim to deliver higher recall rates.

Another innovation involves deploying adversarial networks that generate text designed to fool detection engines. By evaluating those challenging samples, researchers strengthen classifiers against new evasion schemes. Ultimately, admissions offices will access adaptive systems that self-improve through continuous feedback from human reviewers, ensuring a resilient defense against emerging AI capabilities.

| Emerging Technique | Potential Benefit |

|---|---|

| Keystroke Dynamics Analysis | Verifies typing patterns across submissions |

| Adaptive Adversarial Training | Enhances robustness against novel AI text |

| Contextual Topic Modeling | Assesses depth of subject matter knowledge |

| Real-Time Feedback Integration | Allows human reviewers to refine detection rules |

Balancing Fairness & Rigor

Admissions committees must preserve equity while applying rigorous automatic detection tools. Policies now require transparent communication with applicants about plagiarism & AI usage rules. Candidates sign honor pledges, acknowledging awareness of prohibited assistance. That ethical framework encourages honest behavior & clarifies consequences.

Universities also standardize appeal processes for students flagged by the system. Applicants may submit explanations or alternate writing samples to contest findings. This balanced approach ensures detection tools support, rather than replace, human judgment. By combining technological rigor with procedural fairness, colleges uphold integrity across their review pipelines.

Fairness Measures

- Transparent AI usage policies in admission guidelines

- Honor pledges requiring student acknowledgment

- Appeal options for flagged essays

- Regular audits of detection accuracy & bias

Collaborations Shaping College Admissions Tools

Higher education institutions partner with technology firms & academic researchers to refine detection methodologies. Joint working groups meet quarterly to exchange insights on evolving generative models & fresh evasion tactics. Such collaborations accelerate the development of specialized datasets that better reflect the nuances of applicant essays.

These partnerships also yield standardized benchmarks for tool performance. Schools share anonymized samples to evaluate detection engines against real-world submissions. By pooling resources, the community ensures that improvements benefit all participating colleges. That cooperative model drives rapid progress & promotes consistency in scoring across diverse review boards.

| Partner Type | Contribution |

|---|---|

| EdTech Companies | Provide software development expertise |

| Academic Labs | Offer research on latest language models |

| University Committees | Share real application samples |

| Policy Groups | Create ethical guidelines |

Key Metrics for Continuous Improvement

Admission offices monitor various performance indicators to assess detection efficacy. They track the false positive rate, flag-to-confirmation ratio, & appeal success percentage. High false positives require threshold adjustments, while low confirmation rates suggest flaws in training data. Regular metric reviews guide calibration & tool updates.

Committees also survey applicant experiences to gauge perceived fairness. Feedback highlights areas needing clearer communication or procedural tweaks. By analyzing both quantitative & qualitative data, institutions refine their use of AI detector systems, ensuring they remain accurate, unbiased, & trusted by all stakeholders.

Performance Indicators

- False Positive Rate (FPR)

- Flag Confirmation Ratio

- Appeal Resolution Time

- Applicant Satisfaction Scores
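
The first two indicators reduce to simple ratios. The sketch below shows one way to compute them from review counts, plus the appeal success percentage mentioned earlier; the function names and the example counts are hypothetical.

```python
def false_positive_rate(false_positives: int, true_negatives: int) -> float:
    """Share of human-written essays that the detector wrongly flagged."""
    human_essays = false_positives + true_negatives
    return false_positives / human_essays if human_essays else 0.0


def flag_confirmation_ratio(confirmed_flags: int, total_flags: int) -> float:
    """Share of flagged essays that human review confirmed as AI-generated."""
    return confirmed_flags / total_flags if total_flags else 0.0


def appeal_success_rate(successful_appeals: int, total_appeals: int) -> float:
    """Share of appeals that overturned a flag."""
    return successful_appeals / total_appeals if total_appeals else 0.0


# Made-up counts for one review cycle.
print(false_positive_rate(false_positives=20, true_negatives=980))   # 20 of 1000
print(flag_confirmation_ratio(confirmed_flags=30, total_flags=50))   # 30 of 50
print(appeal_success_rate(successful_appeals=4, total_appeals=10))   # 4 of 10
```

Computing a false positive rate requires knowing which essays were truly human-written, so in practice it can only be estimated from cases where authorship was later verified.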

Key Features of Popular AI Detection Tools

| Tool | Primary Feature |

|---|---|

| Turnitin AI | Writing pattern analysis |

| GPTZero | Perplexity scoring |

| Copyleaks AI Detector | Text originality scores |

| Originality.AI | Probability metrics |

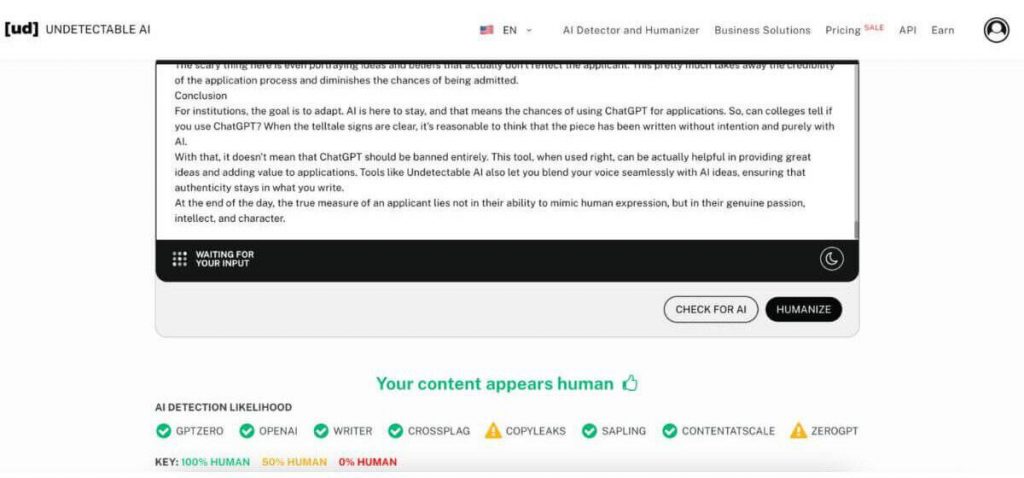

When admissions officers evaluate AI detectors, they often start with a clear view of each tool’s unique metrics. Each detector scans essays & highlights sections that match common machine writing patterns, grammar structures or predictable phrasing. Institutions rely on these key features to form a baseline for human review. Some tools focus on metrics like perplexity, which scores how surprising each word choice is to a language model. Others report originality percentages by comparing submissions to large data banks. Combining these metrics helps admissions teams flag suspect sections while still evaluating creative expression. Detectors mark shifts in tone & vocabulary that often accompany automated content. By reviewing the results, admissions teams can decide whether an essay reflects genuine insight or automated output. Understanding these features guides staff toward fair, consistent decisions about AI-generated essays without depending on a single indicator.

How Turnitin’s AI Writing Detection Works

The detection process at Turnitin blends multiple checks to gauge writing authenticity. It begins by scanning documents for repeated patterns that align with known AI replies. Next, it measures sentence complexity & vocabulary range against typical human benchmarks. Finally, it cross-references text against an extensive database of academic submissions & web sources.

- Pattern Recognition: Highlights matching AI text segments.

- Complexity Analysis: Rates sentence structure variance.

- Database Comparison: Compares with previous essays.

- Alert Generation: Flags suspicious paragraphs.

- User Dashboard: Provides visual detection summaries.

Admissions teams value Turnitin’s method because it brings clear, repeatable results for reviewing AI-Generated Essays. Staff can quickly filter out high-risk applications & prioritize human review. This process supports consistent evaluations across large applicant pools & integrates well into existing plagiarism checks.

Examining GPTZero in Admissions Screening

| Aspect | GPTZero Approach |

|---|---|

| Scoring Model | Perplexity & Burstiness |

| Speed | Seconds per page |

| Visualization | Heatmaps showing anomalies |

| Customization | User-defined thresholds |

GPTZero often comes up when admissions offices discuss which detector to use. It offers rapid evaluation by focusing on how predictable text segments appear. Low perplexity values often signal AI involvement, while higher burstiness reflects the varied pacing & word choice typical of a human writer. Admissions officers can adjust detection thresholds to suit their institution’s risk tolerance. The tool’s heatmaps display color-coded signals right in the document, making it easy to see which sentences merit closer reading. This method supports quick triage of large application volumes. By logging detailed metrics, admissions staff track emerging writing patterns & spot essays that warrant in-depth review.
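
Perplexity has a standard definition: the exponential of the average negative log-probability a language model assigns to each token. The sketch below applies that definition to made-up token probabilities. The burstiness function is an assumption for illustration only (the spread of per-sentence perplexities); the source does not give GPTZero's actual formula, and this is not a description of its implementation.

```python
import math
from statistics import pstdev


def perplexity(token_probs: list[float]) -> float:
    """exp of the mean negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_neg_log)


def burstiness(sentences: list[list[float]]) -> float:
    """Assumed definition: population std dev of per-sentence perplexities."""
    return pstdev([perplexity(sentence) for sentence in sentences])


# Made-up probabilities; a real tool would obtain them from a language model.
predictable = [[0.5, 0.5], [0.5, 0.5]]   # every sentence equally predictable
varied = [[0.5, 0.5], [0.25, 0.25]]      # second sentence is more surprising

print(perplexity([0.5, 0.5]))   # close to 2
print(burstiness(predictable))  # no spread between sentences
print(burstiness(varied))       # spread between perplexities of about 2 and 4
```

Under this assumed definition, text whose sentences are all equally predictable has zero burstiness, which matches the intuition that uniform pacing looks more machine-like.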

Copyleaks AI Detector: A Deep Dive

Copyleaks combines machine learning models with linguistic analysis to detect AI-originated passages. First, it checks for unusual n-gram sequences rarely found in human writing. Next, it applies probability metrics to each sentence to calculate the chance it came from an algorithm. Finally, it compiles a comprehensive report that scores overall authenticity.

- Sequence Analysis: Tracks phrase frequencies.

- Probability Scores: Assigns percent-based authenticity checks.

- Context Matching: Examines adjacent sentences for coherence.

- Multi-language Support: Works beyond English.

- API Integration: Embeds in admission portals.

By integrating Copyleaks into their workflow, College Admissions offices tap detailed reporting to inform essay scoring. The system’s API lets admissions platforms trigger scans automatically, so essays arrive pre-checked. Alerts identify high-probability AI segments, which reviewers then inspect manually. This workflow enhances confidence in decisions about AI-Generated Essays while preserving staff time.
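
The idea of checking n-gram sequences against what human writing usually contains can be shown with a toy example. This sketch is not Copyleaks' method or API; it assumes a reference count of word trigrams from known human text, and the function names are hypothetical.

```python
from collections import Counter


def ngrams(text: str, n: int = 3) -> list[tuple[str, ...]]:
    """All runs of n consecutive lowercase words in the text."""
    words = text.lower().split()
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]


def unseen_ngram_share(essay: str, reference_counts: Counter, n: int = 3) -> float:
    """Fraction of the essay's n-grams that never occur in the reference."""
    grams = ngrams(essay, n)
    if not grams:
        return 0.0
    unseen = sum(1 for gram in grams if reference_counts[gram] == 0)
    return unseen / len(grams)


# Toy reference; a usable one would be built from a large human-written corpus.
reference = Counter(ngrams("i grew up near the river and learned to swim there"))

# Four trigrams, of which only "near the ocean" is missing from the reference.
print(unseen_ngram_share("i grew up near the ocean", reference))
```

Looking up a missing key in a `Counter` returns 0 without adding it, so the reference counts stay unchanged between scans.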

Comparison of AI Detectors Used by Colleges

| Detector | Strength |

|---|---|

| Turnitin AI | Comprehensive database match |

| GPTZero | Fast perplexity checks |

| Copyleaks | Fine-grained probability analysis |

| Originality.AI | Strong analytics dashboard |

Many colleges select a combination of tools to balance speed, depth & accuracy. Turnitin excels at matching past submissions, while GPTZero quickly flags potential AI text. Copyleaks & Originality.AI round out the mix with detailed probability models & robust user interfaces. Colleges draw from this lineup to build multi-tier review systems. Fast scans filter out obvious cases, & deeper checks serve as secondary gates. This layered approach improves reliability in identifying suspect sections without overwhelming reviewers.

“I rely on layered detection systems to ensure fair evaluation of every submission, especially as AI writing evolves,” said Mr. Geovany Smitham.

Criteria Admissions Officers Use to Flag Essays

Admissions reviewers examine multiple criteria to decide if an essay needs further AI checking. They look for sudden shifts in tone mid-paragraph, overly uniform vocabulary, or unusually complex sentence structures that seem out of character compared to typical student writing. Other signals, like consistently precise punctuation or a lack of personal anecdotes, raise further questions. Officers compare application materials against other submissions from the same student, checking for consistency in style & voice. They also consider essay length against word count patterns flagged by detectors. When multiple signals align, staff initiate a deeper scan using more advanced detector capabilities. This step blends human insight with automated alerts to judge whether passages were AI-generated rather than the applicant’s genuine effort.

- Tonal consistency across paragraphs

- Vocabulary complexity tracking

- Formatting uniformity checks

- Personal anecdote presence

- Length vs. word count anomalies

Accuracy & False Positives in AI Detection

| Factor | Impact on False Positives |

|---|---|

| High Vocabulary Variance | May trigger alerts |

| Technical Writing | Increases false flags |

| Short Essays | Less reliable metrics |

| Slang Usage | Reduces accuracy |

Accuracy remains a core concern when colleges compare notes on AI detectors. False positives occur when authentic student work resembles patterns that detectors link to algorithmic writing. Technical or research-heavy essays are often flagged because their formal phrasing & specialized terms can resemble machine output. Short responses can distort burstiness measures & mislead the system. To counteract mistaken flags, admissions teams calibrate thresholds & pair automated checks with manual review. By monitoring false positive rates & adjusting detection rules, staff maintain fair assessments & avoid penalizing genuine expression.
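
Threshold calibration can be sketched as follows: given detector scores for essays known to be human-written, pick the lowest threshold that keeps the false positive rate within a chosen limit. The function name, the sample scores, and the rule that an essay is flagged when its score is strictly above the threshold are all assumptions for illustration.

```python
def calibrate_threshold(human_scores: list[float], max_fpr: float) -> float:
    """Lowest observed score t such that flagging scores > t stays within max_fpr."""
    if not human_scores:
        raise ValueError("need at least one score from a known human essay")
    if not 0.0 <= max_fpr <= 1.0:
        raise ValueError("max_fpr must be between 0 and 1")
    total = len(human_scores)
    candidates = sorted(set(human_scores))
    for threshold in candidates:
        wrongly_flagged = sum(score > threshold for score in human_scores)
        if wrongly_flagged / total <= max_fpr:
            return threshold
    # Not reached: at the highest score, no human essay is flagged.
    return candidates[-1]


# Made-up detector scores for five essays known to be human-written.
human_scores = [0.1, 0.2, 0.3, 0.4, 0.9]
print(calibrate_threshold(human_scores, max_fpr=0.2))  # tolerates one wrong flag in five
print(calibrate_threshold(human_scores, max_fpr=0.0))  # tolerates none
```

A stricter limit pushes the threshold higher, which reduces wrongful flags but also lets more AI-generated essays pass unflagged; that trade-off is why manual review stays in the loop.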

Ethical Implications of AI Essay Scanning

Implementing automated AI Detector scans raises ethical questions about fairness & transparency. Students may feel uneasy if they sense hidden algorithms judging their work. Colleges must balance technology’s efficiency with respect for applicant privacy. In many cases, institutions publish policies explaining how they use detectors on admissions essays. This transparency builds trust & clarifies expectations around AI-Generated Essays. Ethical use also requires ongoing audits of detection accuracy & bias. Staff should receive regular training on interpreting results to avoid overreliance on flags. Clear guidelines ensure admissions officers apply human judgement alongside algorithmic signals, preventing mechanical rejection of genuine essays.

- Transparency in detection policies

- Regular bias audits

- Balanced human-machine review

- Privacy safeguards for applicants

- Clear communication of expectations

Guidelines for Students to Maintain Authenticity

| Tip | Benefit |

|---|---|

| Use personal stories | Unique voice |

| Vary sentence length | Higher burstiness |

| Proofread yourself | Reduces uniformity |

| Limit jargon | Matches human style |

Students preparing essays for college admissions can take steps to avoid false AI flags. Starting with genuine personal anecdotes helps establish a distinct voice. Mixing short & long sentences prevents uniform pacing, which detectors often link to machine-generated text. Self-editing rather than relying on automated tools preserves organic phrasing & shows individual style. Steering clear of excessive technical terms or academic jargon reduces risk, since detectors sometimes misinterpret specialized language as AI-generated. By following these guidelines, applicants showcase their true abilities without tripping alerts meant for AI-generated essays.

Future Trends in AI-Generated Essay Detection

Detection technology continues to evolve with new algorithm updates. Institutions looking ahead expect features that analyze semantic coherence & emotional tone. Machine learning models will grow more adept at tracking narrative arcs & voice consistency. Integration with natural language understanding tools can spot nuanced shifts in argument flow that older detectors miss. Some campuses plan to pilot peer review modules assisted by AI, where flagged sections undergo collaborative human analysis. As AI writing tools improve, detectors will rely on neural networks trained on ever-larger data sets to keep pace. Admissions teams will need to update their detection stacks regularly, ensuring fair evaluations & adapting to novel writing assistance platforms.

- Semantic coherence checks

- Emotional tone analysis

- Collaborative review interfaces

- Neural network enhancements

- Continuous model retraining

Incorporating AI Detection Results in Admissions Decision

| Stage | Action |

|---|---|

| Initial Screening | Automated flagging |

| Secondary Review | Manual essay reading |

| Committee Discussion | Flagged cases debate |

| Final Decision | Holistic evaluation |

When a submission triggers an AI Detector alert, admissions staff follow a multi-stage process. Automated scans provide the first filter. Then, readers conduct in-depth manual reviews of flagged paragraphs, focusing on context & personal relevance. If concerns persist, the admissions committee discusses those cases collectively. They consider other materials, such as teacher recommendations & test scores, before reaching a conclusion. Final decisions rest on a holistic assessment, ensuring no single AI alert dictates an outcome.

Training Admissions Staff on AI Tools

Effective use of AI Detector platforms requires well-trained personnel. Colleges design workshops covering each tool’s interface & result interpretation. Participants learn to read probability metrics, perplexity scores & heatmap outputs. Role-playing exercises simulate essay reviews, challenging staff to decide between human writing & automated text. Ongoing refresher sessions help teams adapt to algorithm updates & avoid skill atrophy. Feedback loops collect real-world detection data, guiding future training modules. By investing in education, College Admissions offices ensure consistent, fair use of technologies that screen for AI-Generated Essays.

- Hands-on tool demonstrations

- Result interpretation workshops

- Simulated essay review drills

- Regular update briefings

- Feedback-driven curriculum

Legal Considerations in AI Essay Analysis

| Legal Aspect | Key Concern |

|---|---|

| Data Privacy | Storing personal essays |

| Disclosure Obligations | Informing applicants |

| Intellectual Property | Ownership of analysis |

| Bias Audit | Non-discriminatory use |

Before deploying a new AI detector, institutions review data privacy laws & candidate rights. They develop policies that specify how long essays remain on servers & who can access results. Disclosure requirements may mandate informing applicants about the use of automated tools. Legal teams also assess potential biases that could arise if detectors misinterpret writing styles tied to cultural or linguistic backgrounds. Documenting these reviews protects colleges from disputes & supports ethical handling of AI detection data.

Impact on Student Writing Practices

The rise of automated AI Detector checks influences how students approach essays. Many now draft with greater attention to personal perspective & anecdotal detail, which helps avoid algorithmic red flags. Some educators encourage peer feedback sessions to foster authentic tone & varied syntax. Writing centers update their guidance to cover effective human editing rather than reliance on text generators. As students adapt, they develop stronger self-editing skills that produce natural voice patterns. Overall, these shifts support enhanced writing proficiency while reducing the likelihood of false AI detection.

- Stronger focus on personal perspective

- Peer feedback for authentic tone

- Writing center workshops on human editing

- Balanced use of external tools

- Emphasis on narrative diversity

I once prepared a scholarship application & used an AI writing assistant to refine my draft. After uploading it to a detection tool, I noticed a high probability score for AI-generated content. I then rewrote several sections manually, added personal anecdotes, & restructured odd phrasing. When I re-scanned the essay, the alert vanished. That experience taught me the value of genuine voice & careful self-editing in the admissions process.

Conclusion

Many colleges rely on tools like Turnitin’s AI writing detection & GPTZero to flag essays that look computer-made. These tools scan writing style, word choice, & sentence flow to find patterns that might not match a student’s normal voice. Admissions teams use this information as a starting point for follow-up checks. They compare flagged essays with classwork & ask questions if something seems off. While no method is perfect, these tools help reviewers spot possible AI use quickly & fairly. Students can focus on honest writing to show their own thoughts & skills in every essay they send.